Pulse

A downloadable game

Pulse is an experimental project that explores whether emotion recognition and emotionally adaptive techniques can be used to augment virtual reality (VR) experiences.

Recent advances in human-computer interaction, display technology, and input technology have pushed game design and user experience to new heights. We wanted to take things a step further by studying whether a player's real-time emotions could be used to adapt a game autonomously, especially in VR, where the player is immersed in and engages more deeply with the virtual world.

Developed by students as a senior research and design project at the University of California, Irvine's Henry Samueli School of Engineering, Pulse draws on machine learning, digital signal processing, electrical engineering, computer science, and game development techniques.

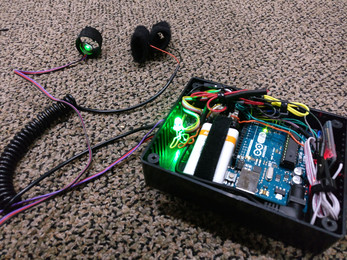

To recognize user emotions, Pulse takes a biofeedback-centric approach built on two sensors: a photoplethysmography (PPG) sensor, which tracks the user's pulse, and a galvanic skin response (GSR) sensor, which tracks skin conductance. Their readings are relayed continuously over Bluetooth to a connected PC.
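Before any processing, the PC side has to turn a continuous stream of sensor readings into fixed-size windows. The sketch below assumes a hypothetical text format of one `ppg,gsr` pair per line (the real packet format is not documented here) and keeps the most recent samples of each signal in a bounded buffer, silently dropping malformed lines.

```python
from collections import deque
from typing import Deque, Optional, Tuple


def parse_sample(line: str) -> Optional[Tuple[float, float]]:
    """Parse one hypothetical 'ppg,gsr' text line; return None if malformed."""
    parts = line.strip().split(",")
    if len(parts) != 2:
        return None
    try:
        return float(parts[0]), float(parts[1])
    except ValueError:
        return None


class SampleWindow:
    """Keeps only the most recent `size` samples of each signal (hypothetical helper)."""

    def __init__(self, size: int) -> None:
        self.ppg: Deque[float] = deque(maxlen=size)
        self.gsr: Deque[float] = deque(maxlen=size)

    def push(self, line: str) -> bool:
        """Append one parsed sample; return False if the line was dropped."""
        sample = parse_sample(line)
        if sample is None:
            return False  # drop corrupted lines instead of crashing the pipeline
        self.ppg.append(sample[0])
        self.gsr.append(sample[1])
        return True

    def full(self) -> bool:
        """True once enough samples have arrived to fill a whole window."""
        return len(self.ppg) == self.ppg.maxlen
```

Using `deque(maxlen=...)` means old samples fall off automatically, so each full window always reflects the latest readings.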

A custom Python program on the PC then denoises the incoming data, extracts 11 statistical features from it, and passes them to a 20-tree random forest classifier, trained on data from the Massachusetts Institute of Technology's Affective Computing Lab, to determine whether the user is feeling fearful or calm. Because of time constraints, the classifier covers only these two emotional states.
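The denoise-then-extract step might look roughly like the following. This is a minimal sketch, not the project's code: the moving-average filter stands in for whatever denoising Pulse actually used, and the seven per-signal statistics are hypothetical placeholders, since the project's exact 11 features are not listed here.

```python
import statistics
from typing import List, Sequence


def moving_average(signal: Sequence[float], width: int = 5) -> List[float]:
    """Trailing moving-average low-pass filter (stand-in for the real denoising)."""
    if width < 1:
        raise ValueError("width must be >= 1")
    out: List[float] = []
    running = 0.0
    for i, x in enumerate(signal):
        running += x
        if i >= width:
            running -= signal[i - width]  # drop the sample leaving the window
        out.append(running / min(i + 1, width))
    return out


def extract_features(signal: Sequence[float]) -> List[float]:
    """Hypothetical statistical features for one window of one signal."""
    if len(signal) < 3:
        raise ValueError("need at least 3 samples")
    first = [b - a for a, b in zip(signal, signal[1:])]  # first differences
    second = [b - a for a, b in zip(first, first[1:])]   # second differences
    return [
        statistics.mean(signal),
        statistics.pstdev(signal),
        min(signal),
        max(signal),
        statistics.median(signal),
        statistics.mean(abs(d) for d in first),
        statistics.mean(abs(d) for d in second),
    ]


def window_features(ppg: Sequence[float], gsr: Sequence[float], width: int = 5) -> List[float]:
    """Denoise both signals, then concatenate their feature vectors."""
    return extract_features(moving_average(ppg, width)) + extract_features(
        moving_average(gsr, width)
    )
```

Each resulting vector would then be classified; with scikit-learn, for example, a 20-tree forest is `RandomForestClassifier(n_estimators=20)`, trained with `fit(X, y)` on labeled windows and queried with `predict`.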

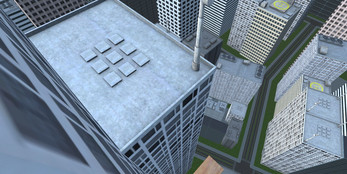

These emotion results are then sent over a TCP connection to a VR experience built in the Unity engine, which adapts to the recognized emotion. Our experience takes the player to the top of a 30-story skyscraper and challenges them to walk along a plank suspended high in the air, mirrored by a physical plank in the real world.
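On the Python side, sending a result to Unity can be as small as writing one line per classification. The sketch assumes a hypothetical newline-terminated ASCII protocol with the labels `fear` and `calm`; the actual message format Pulse used is not documented here.

```python
import socket

VALID_LABELS = ("fear", "calm")  # hypothetical label strings


def encode_emotion(label: str) -> bytes:
    """Encode one classification result as a newline-terminated ASCII line."""
    if label not in VALID_LABELS:
        raise ValueError(f"unknown label: {label!r}")
    return (label + "\n").encode("ascii")


def send_emotion(sock: socket.socket, label: str) -> None:
    """Send one result over an already-connected TCP socket."""
    sock.sendall(encode_emotion(label))
```

A caller might open the connection with `socket.create_connection(("127.0.0.1", 5005))` (the port is a placeholder) and call `send_emotion` after each window. Newline framing matters because TCP is a byte stream with no message boundaries, so the receiver needs a delimiter to split results apart.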

If the system determines that the player is afraid, their elevation gradually decreases to calm them down; if it determines that the player is calm, their elevation increases in the hope of heightening their fear and anxiety. The experience was intended not only to demonstrate that emotion recognition and emotionally adaptive techniques can be used to adapt games, but also to show that they could potentially serve a secondary purpose: therapy.
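The adaptation rule above amounts to a clamped step toward lower or higher elevation. The following is a language-neutral sketch in Python rather than the project's Unity code; the step size and the lowest and highest bounds are hypothetical values, and an actual Unity implementation would more likely scale the step by frame time.

```python
def next_elevation(current: float, emotion: str, step: float = 0.5,
                   lowest: float = 0.0, highest: float = 100.0) -> float:
    """Move one small step down when afraid, up when calm, clamped to bounds."""
    if emotion == "fear":
        target = current - step  # lower the player to ease fear
    elif emotion == "calm":
        target = current + step  # raise the player to increase the challenge
    else:
        target = current  # unrecognized label: hold position
    return max(lowest, min(highest, target))
```

Stepping by a small amount per update, rather than jumping straight to a target, is what makes the change gradual; the clamp keeps the player within the plank's usable height range.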

For our work on this project, our team won the 2017 Henry Samueli School of Engineering Dean's Choice Award, and our poster and abstract were published in the University of California eScholarship directory.

Our team is as follows...

Engineering and Game Design:

- Andrew Tran

- Reigan Alcaria

- Jude Collins

- Jong Seon "Sean" Lee

Audio Director:

- Brandon Pieterouiski (Berklee College of Music)

Mentors:

- Professor Ahmed Eltawil

- Ahmed Khorshid

For more information on our project, feel free to visit our development blog here.